After a wonderful first presentation at the Lavoir Public as part of the Mirage Festival on 27 February, you can catch us on 4 April at the MAC de Créteil as part of Mac+'s Café Europa. You can also take the opportunity to visit the Exit exhibition at the MAC de Créteil, as your ticket gives you access to it. ;)

For those interested, I will also lead a talk/workshop on 3 April about using lasers in performance and installation.

In the meantime, to tide you over, here are a few images from various work and residency periods.

Hyperlight performance _ an experimental laboratory around light and the living

Thomas Pachoud

Multimedia engineer and visual artist for live performance and interactivity.

Saturday 14 March 2015

Sunday 22 February 2015

Hyperlight _ performance _ presentation at the Lavoir Public as part of the Mirage

Hyperlight continues on its way. After a one-week residency in Berlin at Christmas, followed by two weeks at ENSATT over the last few days, a work in progress of a performance will be presented on Friday 27th at the Lavoir Public as part of the Mirage Festival.

For me, performance is a space for experimentation and research, a laboratory of possibilities where we explore light in its various forms and textures. We treat it both as an organic, living material and as a way to create and shape the stage and scenographic space.

It is woven around the encounter between two entities, one living and the other made of light.

A few images from our previous weeks of work and research. ;)

Artistic direction: Thomas Pachoud

Sound composition: David Guerra

Choreography and dance: Thalia Ziliotis

Monday 20 October 2014

Lumarium - Vidéophonic end-of-residency video

Hyperlight is a new research project about space and how we perceive it.

Using light as a medium, for its materiality in an opacified space, I seek to create sensitive, living spaces and to question the limits of our perception of space.

Two weeks ago, as part of AADN's Vidéophonic residencies, this project gave rise to a first piece: the Lumarium.

Built around an 8-minute audiovisual sequence, it introduces the whole body of Hyperlight research.

Here is a first video presenting the project, made during the residency at the Vaulx-en-Velin planetarium.

VIDEOPHONIC : Lumarium, au Planétarium de Vaulx-en-Velin from AADN on Vimeo.

LUMARIUM from AADN on Vimeo.

Saturday 30 November 2013

Sunday 6 October 2013

Bionic Orchestra 2.0

Bionic Orchestra 2.0 is the follow-up to the Bionic Orchestra performance we made two years ago with Ezra and LOS. We worked for a year with the Atelier Arts Science in Grenoble on an interactive glove that replaces the iPhone for controlling the looper.

I built several things for this performance. First, the glove, which can control all the loops, the spatialisation, the effects and whatever other tools Ezra uses to create his sound. The glove is based on an Arduino, an IMU sensor and a tactile interface. It sends control data to Max/MSP, which interprets it to control Ableton Live. I also worked on the video for this setup. We added three screens around the audience, mapped with video. Three video projectors map the audience from above (playing with the light in the smoke), and a last one maps the stage. I'm still using VDMX for this part, plus a bit of openFrameworks for the advanced mapping on the audience. More about the full setup and the glove soon.
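The glove's firmware isn't described here. One common way to turn an IMU into continuous controls is to derive roll and pitch from the accelerometer's gravity vector, then rescale them to a 0..1 range that a Max/MSP patch could map onto a loop or effect parameter. A minimal sketch, assuming raw accelerometer readings in g, a mostly still hand, and a hypothetical ±90° usable range. The function names are illustrative and are not the glove's actual code.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Return (roll, pitch) in degrees from a static accelerometer reading.

    Assumes the hand is roughly still, so the measured vector is gravity.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    return roll, pitch


def to_control(angle_deg: float, limit_deg: float = 90.0) -> float:
    """Map an angle in [-limit, +limit] to a 0..1 control value, clamped."""
    normalized = (angle_deg + limit_deg) / (2.0 * limit_deg)
    return min(1.0, max(0.0, normalized))


if __name__ == "__main__":
    # Hand lying flat: gravity only on the z axis, so both controls sit mid-range.
    roll, pitch = tilt_from_accel(0.0, 0.0, 1.0)
    print(to_control(roll), to_control(pitch))  # 0.5 0.5
```

Clamping keeps the control inside its range when the hand tilts past the assumed limit, so the receiving patch never gets out-of-range values.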

Monday 8 July 2013

Sunday 24 February 2013

Organic Orchestra installation

A short video report on the first installations built with Cie Organic Orchestra. More research around paper coming soon! ;)

Tuesday 16 October 2012

Ezra 2.0 @ le cube

A small video interview about our performance with Ezra last month at le cube.

Ezra 29.09.12 by le_cube

Wednesday 1 August 2012

Mazut

Mazut is a performance by the Baro D'evel Cirk Cie. I worked on a water-drop system that is used in the scenography and the dramaturgy, and that also provides the base music of the show.

Sunday 4 March 2012

Proximity tech report

I have just finished some really exciting work with the Australian Dance Theatre - ‘Proximity’.

Proximity is a contemporary dance performance that involves a lot of real-time imagery. We have three HD cameras on stage filming the dancers. Their images are manipulated in real time and then projected back onto three 4:3 screens in portrait orientation at the back of the stage.

playing with time and replicating the dancers in space,

photo Chris Herzfeld, camlight production

playing with point of view, of the camera, of the audience, of the dancers,

photo Chris Herzfeld, camlight production

playing with some fx and adding some custom material to the imagery, mostly through custom Open Frameworks apps.

photo Chris Herzfeld, camlight production

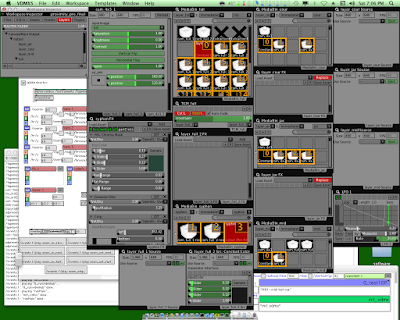

My setup is mostly built around VDMX5, used as a flexible visual engine. I really love working with this software, which makes the setup very flexible and is highly customisable. You can also plug Quartz Composer into it at any level, which gives even more flexibility.

For some of the effects used in the performance, I also built a custom openFrameworks app. It receives video via Syphon, then sends the processed video back through Syphon to VDMX for display on the screens.

The whole setup is then driven through Max/MSP running tapemovie, software built with the French didascalie.net team. During the performance, tapemovie records cues and manages effect parameters, layer ordering, layer sources (...).
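tapemovie's internals aren't described here. The core idea of any cue system is to snapshot the current parameter state under a cue name and restore it later in show order. A minimal sketch of that idea, in which the `CueList` class and the parameter names are hypothetical and do not reflect tapemovie's actual API:

```python
from copy import deepcopy
from typing import Optional


class CueList:
    """Hypothetical cue store: each cue is a full snapshot of the parameters."""

    def __init__(self) -> None:
        self.params: dict[str, object] = {}
        self.cues: dict[str, dict[str, object]] = {}
        self.order: list[str] = []

    def set(self, name: str, value: object) -> None:
        """Change a live parameter (layer source, fx amount, layer order...)."""
        self.params[name] = value

    def record(self, cue: str) -> None:
        # Deep-copy so later edits to the live state do not alter stored cues.
        if cue not in self.cues:
            self.order.append(cue)
        self.cues[cue] = deepcopy(self.params)

    def recall(self, cue: str) -> dict[str, object]:
        """Restore a cue's snapshot as the live state."""
        self.params = deepcopy(self.cues[cue])
        return self.params

    def next_after(self, cue: str) -> Optional[str]:
        """Name of the cue recorded after `cue`, or None at the end of the show."""
        i = self.order.index(cue)
        return self.order[i + 1] if i + 1 < len(self.order) else None
```

For example, record cue "1" with `layer1/source` set to a first camera and cue "2" with a second camera. Recalling "1" then restores the first camera, and `next_after("1")` returns "2". Storing full snapshots rather than deltas keeps each cue independent, so cues can be jumped to out of order.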

I operate the show from the stage wings with an iPad running the Lemur app. Compared to TouchOSC or any other app, Lemur has two important advantages:

1) it can script and automate processes and behaviours directly on the Lemur;
2) it can send commands to multiple OSC addresses.

This means I can send to my main Mac Pro and to the spare Mac Pro at the same time. It also means that with one button on the iPad I can switch everything from the primary Mac to the backup Mac instantly. Finally, it lets me send different information to different apps (Max/MSP and VDMX in this case).

On the subject of convenience and reliability, all of our video processing is routed over SDI. Some tricks are needed for the output, because SDI is limited to common HD video formats (i.e. 16:9, whereas our actual output is 4:3 rotated by 90°). The big advantage of running everything over SDI is that every signal passes through a 16x16 Blackmagic SDI matrix, so any source can be routed to any destination. To control the Blackmagic router, I built a small addon for openFrameworks, controlled from Max through OSC and available on my GitHub.
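The addon itself is C++ and isn't reproduced here. This sketch assumes the matrix speaks Blackmagic's Videohub Ethernet protocol: a plain-text protocol over TCP port 9990, where a `VIDEO OUTPUT ROUTING:` block lists zero-based `output input` pairs and ends with a blank line. Check this against your router's developer documentation. The sketch builds the routing command and ignores the status dump and ACK that the router sends back.

```python
import socket

VIDEOHUB_PORT = 9990  # assumed Videohub Ethernet protocol port
SIZE = 16  # 16x16 matrix


def routing_block(routes: dict[int, int]) -> bytes:
    """Build a routing block mapping output -> input, both zero-based."""
    lines = ["VIDEO OUTPUT ROUTING:"]
    for output, source in sorted(routes.items()):
        if not (0 <= output < SIZE and 0 <= source < SIZE):
            raise ValueError(f"route {output}<-{source} outside {SIZE}x{SIZE}")
        lines.append(f"{output} {source}")
    # A blank line terminates the block.
    return ("\n".join(lines) + "\n\n").encode("ascii")


def send_routes(host: str, routes: dict[int, int]) -> None:
    """Open a TCP connection to the router and send one routing block."""
    with socket.create_connection((host, VIDEOHUB_PORT), timeout=2.0) as conn:
        conn.sendall(routing_block(routes))
```

An OSC handler in Max or OF would only need to translate an incoming "route output X to input Y" message into a call like `send_routes(host, {X: Y})`.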

As I'm working on three screens in vertical 4:3, my output resolution is 1466x640: three 480x640 screens, plus two 13 px strips that are dropped for the overlap between screens (3 × 480 + 2 × 13 = 1466). My first process in VDMX is to send each camera into a custom camera plugin. The plugin resizes the image to 1466x640 (or 480x640, depending on whether it is used on one screen or on all three) and adds colour, position and zoom controls. As I use the output across the three screens most of the time, I have two plugins for the main output, each fed by one of the 720p inputs of my Blackmagic Studio Duo. A third camera plugin feeds a screen at a smaller size (640x480). It can take either of my inputs or the three-screen output (to replicate the image on the sides).
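The output width follows directly from the screen layout: three 480-pixel-wide screens plus two 13-pixel strips that are discarded when the canvas is cut up. A minimal sketch of that arithmetic, computing where each screen's crop sits inside the 1466x640 canvas. The constant names are illustrative.

```python
SCREEN_W, SCREEN_H = 480, 640  # one vertical 4:3 screen
GAP = 13  # pixels dropped between adjacent screens
N_SCREENS = 3

CANVAS_W = N_SCREENS * SCREEN_W + (N_SCREENS - 1) * GAP


def screen_rects() -> list[tuple[int, int, int, int]]:
    """(x, y, width, height) of each screen's crop inside the wide canvas."""
    return [(i * (SCREEN_W + GAP), 0, SCREEN_W, SCREEN_H) for i in range(N_SCREENS)]


print(CANVAS_W)  # 1466
print(screen_rects())  # [(0, 0, 480, 640), (493, 0, 480, 640), (986, 0, 480, 640)]
```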

I use one main group of two layers with auto-crossfading, sized for the image that spans the three screens. I also have three side layers, each one used for a single screen.
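VDMX's auto-crossfade is built in, but the underlying operation is a timed blend between the outgoing and the incoming layer. A minimal sketch on single pixel values, assuming a linear ramp. The function names and the fade duration are hypothetical.

```python
def crossfade_position(elapsed_s: float, duration_s: float) -> float:
    """Linear 0..1 fade position, clamped once the fade is finished."""
    if duration_s <= 0:
        return 1.0
    return min(1.0, max(0.0, elapsed_s / duration_s))


def blend(a: float, b: float, t: float) -> float:
    """Mix outgoing value a with incoming value b at position t."""
    return (1.0 - t) * a + t * b


# Halfway through a 2-second fade from black (0) to white (255):
t = crossfade_position(1.0, 2.0)
print(blend(0.0, 255.0, t))  # 127.5
```

With two layers in the group, the next source is always loaded into the hidden layer and faded in, so cuts never show a half-loaded image.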

Finally, all these layers feed into a group that drives the output. This group has one always-active effect that:

- cuts my image into three 480x640 images;
- rotates them by 90 degrees;
- applies a GLSL keystone to each one for easy setup;
- places each into a 720p frame for SDI output to its projector (by adding black on the top and bottom).
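Each 480x640 portrait slice has to travel over SDI as a standard 1280x720 frame. Rotated by 90°, the slice becomes 640x480. Fitting that into 1280x720 without distortion gives a scale of 1.5 (limited by the height), so the frame holds 960x720 of picture with 160 pixels of black on each side. In the frame's own orientation the bars fall left and right. On a projector mounted rotated, they presumably become the top and bottom bars described above. A sketch of the fit arithmetic, assuming aspect-preserving scaling; the keystone stage is left out.

```python
from dataclasses import dataclass


@dataclass
class Fit:
    scale: float
    width: int
    height: int
    pad_x: int  # black on each side, horizontally
    pad_y: int  # black on each side, vertically


def fit_rotated(src_w: int, src_h: int, dst_w: int = 1280, dst_h: int = 720) -> Fit:
    """Rotate a src_w x src_h slice by 90 degrees and fit it inside the frame."""
    rot_w, rot_h = src_h, src_w  # a 90-degree rotation swaps the axes
    scale = min(dst_w / rot_w, dst_h / rot_h)  # largest scale that still fits
    w, h = round(rot_w * scale), round(rot_h * scale)
    return Fit(scale, w, h, (dst_w - w) // 2, (dst_h - h) // 2)


print(fit_rotated(480, 640))
# Fit(scale=1.5, width=960, height=720, pad_x=160, pad_y=0)
```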

I have one more layer used for monitoring the screens and for the Syphon output. Most of the time it is fed from my main layer to monitor the output. Occasionally it takes the camera, when that feed has to go through Syphon to my custom OF app. The app then sends a feed back through Syphon with the effect applied. The app renders not only the effect but also the camera image itself, because there is a latency of 1 or 2 frames in between.

The custom OF app is mainly used for two effects in the show. Both rely on detecting the dancers with OpenCV. I first do a colour-based background subtraction, then a threshold on the black/dark areas to detect their contours.
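The detection in the show runs on OpenCV inside the OF app. Real code would use OpenCV functions such as `cv::absdiff`, `cv::threshold` and `cv::findContours`. As a plain illustration of the steps described, here is a pure-Python sketch on a tiny grayscale image. It computes the per-pixel difference against a stored background, thresholds that difference, and extracts the boundary pixels of what remains. Grayscale stands in for the colour-based subtraction, and the threshold value is hypothetical.

```python
Image = list[list[int]]  # rows of 0..255 grayscale values


def foreground_mask(frame: Image, background: Image, threshold: int = 40) -> list[list[bool]]:
    """True where the frame differs from the background by more than threshold."""
    return [
        [abs(p - b) > threshold for p, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def boundary(mask: list[list[bool]]) -> list[tuple[int, int]]:
    """(row, col) of foreground pixels touching background (4-neighbourhood)."""
    h, w = len(mask), len(mask[0])
    edge = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            neighbours = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            # Image borders count as background.
            if any(not (0 <= nr < h and 0 <= nc < w) or not mask[nr][nc]
                   for nr, nc in neighbours):
                edge.append((r, c))
    return edge
```

A dark 3x3 dancer on a bright 5x5 background yields 9 foreground pixels, of which 8 lie on the boundary.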

The first effect is a particle effect made with an OpenCL kernel, mostly based on Memo.tv's particle kernel and modified for our needs.
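The OpenCL kernel itself isn't reproduced here. What such a kernel typically does for every particle, once per frame and in parallel, is a small integration step: apply forces, damp the velocity, then move. A CPU sketch of that per-particle update, with a hypothetical spring-like pull towards a contour point standing in for whatever forces the real effect uses. The constants are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def step(particles: list[Particle], target: tuple[float, float],
         dt: float = 1 / 60, attraction: float = 4.0, damping: float = 0.98) -> None:
    """One semi-implicit Euler step; a GPU kernel would run this body per particle."""
    tx, ty = target
    for p in particles:
        # Pull towards the target, e.g. a point on the dancer's contour.
        ax, ay = attraction * (tx - p.x), attraction * (ty - p.y)
        p.vx = (p.vx + ax * dt) * damping
        p.vy = (p.vy + ay * dt) * damping
        p.x += p.vx * dt
        p.y += p.vy * dt
```

Each particle's update depends only on its own state and the shared target, which is exactly what makes the loop body easy to move into a parallel kernel.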

The second effect is a kind of web drawn around the dancer. For this one I simplified the contour, then used the Delaunay algorithm to draw lines between the points of the dancer's outline.
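The simplification method isn't specified. One common choice, and the one OpenCV's `approxPolyDP` implements, is Ramer-Douglas-Peucker, which keeps only the points that deviate from a straight chord by more than a tolerance. A pure-Python sketch of that step, assuming an open polyline (split a closed contour in two first) and a hypothetical tolerance. The resulting points could then be passed to any Delaunay triangulation (for example `scipy.spatial.Delaunay`), whose edges become the lines of the web.

```python
import math

Point = tuple[float, float]


def _distance_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / length


def simplify(points: list[Point], tolerance: float) -> list[Point]:
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the interior point farthest from the chord first -> last.
    index, max_dist = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _distance_to_line(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= tolerance:
        return [first, last]
    left = simplify(points[: index + 1], tolerance)
    right = simplify(points[index:], tolerance)
    return left[:-1] + right  # drop the duplicated split point
```

A slightly noisy L-shape collapses to its three corners, which keeps the triangulation small enough to redraw every frame.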

All control of these effects goes through Memo's simpleGuiToo, modified for the occasion to receive OSC commands. I have an OSC singleton that pushes each message into the OF app, where every interface element and OF class receives it. That was the easiest way I found to build an interface quickly and control it instantly through OSC. An OSC message just needs to carry the same name as the interface element it targets.
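The mechanism described is one OSC receiver shared by the whole app, with each GUI element listening for messages that carry its own name. In effect, that is a name-keyed registry. A minimal Python sketch of the pattern, assuming the OSC address is the element name prefixed with "/". The classes are hypothetical and are not simpleGuiToo's API.

```python
from typing import Callable, Optional


class OscRouter:
    """Single shared receiver: routes each OSC address to the control of that name."""

    _instance: Optional["OscRouter"] = None

    def __init__(self) -> None:
        self.handlers: dict[str, Callable[[float], None]] = {}

    @classmethod
    def get(cls) -> "OscRouter":
        """Return the one shared router, creating it on first use."""
        if cls._instance is None:
            cls._instance = cls()
        return cls._instance

    def register(self, name: str, handler: Callable[[float], None]) -> None:
        self.handlers["/" + name] = handler

    def dispatch(self, address: str, value: float) -> bool:
        """Forward a value to the matching control; False if none has that name."""
        handler = self.handlers.get(address)
        if handler is None:
            return False
        handler(value)
        return True


class Slider:
    """GUI element that registers itself under its own label."""

    def __init__(self, name: str, value: float = 0.0) -> None:
        self.name, self.value = name, value
        OscRouter.get().register(name, self.set)

    def set(self, value: float) -> None:
        self.value = value
```

Because registration happens in the element's constructor, adding a new slider to the GUI makes it remotely controllable with no extra wiring.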

Most of the other effects used in the show are built in Quartz Composer. Some of them are available on my GitHub too.

Finally, a huge thanks to Tom Butterworth (bangnoise) and Anton Marini (vade) for Syphon, which makes all of this possible. Thanks again to Tom Butterworth for all his work on the QC sampler to make it lighter and more stable. Thanks to VidVox for their amazing software. Thanks to the whole openFrameworks team and community. Thanks to Renaud Rubiano and the French didascalie.net team behind tapemovie.
